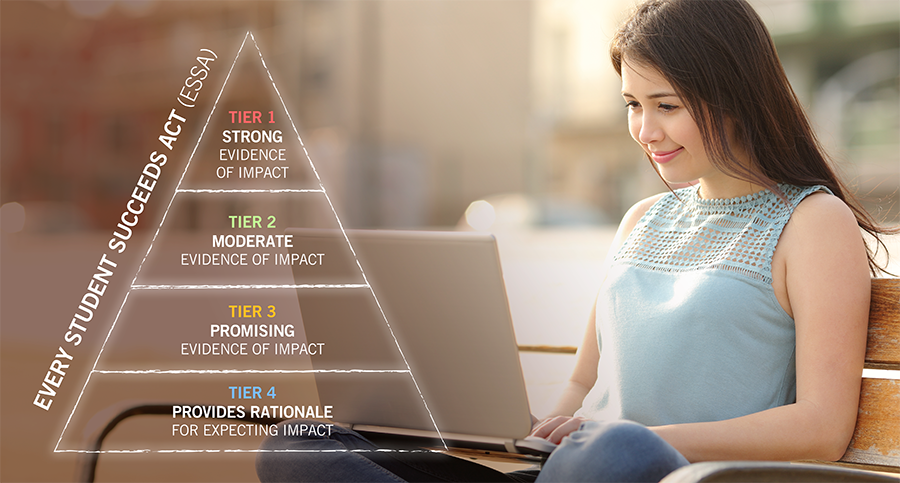

The Every Student Succeeds Act (ESSA) recognizes four tiers of evidence for selecting “evidence-based” strategies:

- Tier 1 includes strong evidence from an experimental study.

- Tier 2 includes moderate evidence from a quasi-experimental study.

- Tier 3 includes promising evidence from a statistically controlled, correlational study.

- Tier 4 demonstrates a rationale, based on high-quality research findings or a positive evaluation, that the strategy is likely to improve student outcomes, AND includes ongoing efforts to examine its effects.

Although study designs vary, strong, moderate, and promising evidence must all show statistically significant effects on student outcomes. For interventions that cannot yet show statistical significance from a high-quality study, ESSA’s fourth tier actually paves the way for building that evidence.

Here are three popular misconceptions about ESSA evidence, and how to avoid the typical “check the box” trap!

“The rigor of Tier 1 pretty much guarantees similarly positive and significant results.”

Whether it’s a diet pill, a technology product, or a reading intervention, beware of any promise of “guaranteed results.” Tier 1 is a very high bar to meet because the research has to be experimental, meaning students must be randomly assigned to intervention and control groups, and other factors that could affect results (like a student’s prior ability) must be identified and controlled.

Because of its rigorous criteria, Tier 1 (strong) evidence usually comes from highly curated research designs with very specific implementation criteria. The statistical significance of the study’s results increases the likelihood that those results can be replicated in a different setting with a similar population and implementation. Still, if your population doesn’t match the study participants or your implementation differs from the study’s, you may get very different results. Maybe even better! Or maybe not… Without vigilant fidelity of implementation, results will most likely fall into the “not better” bucket.

That said, applying evidence-based interventions, strategies, and products to new student groups or use cases may very well contribute to the body of research needed to understand what else could work, and for whom.

“Interventions, strategies, and products in lower tiers are inferior to their counterparts in higher tiers.”

This one might actually be true, but quality is not necessarily the only reason something falls into a lower tier. Yes, an intervention, strategy, or product may lack the kind of results that would cement its status in a higher tier, but in many cases the difference between Tier 4 (demonstrates a rationale) and Tier 3 (promising evidence) is simply the opportunity to implement it somewhere its results can be measured and disseminated. Similarly, the opportunity to assign students randomly to intervention and control groups can sometimes be the primary barrier to crossing the threshold from Tier 2 into Tier 1.

It may also be the case that an intervention showed statistically significant gains in a quasi-experimental (Tier 2) study but lost statistical significance once the research expanded to multiple sites. Education research lacks the luxury of highly controlled scientific experiments, but this simply underscores the need for more education partners and schools willing to continuously pilot innovations and share results.

“This falls into Tier 4 because it hasn’t been able to show statistically significant growth or improvement.”

Actually, this one’s true! But keep in mind that every intervention, strategy, or product, including those at Tier 1, typically starts from a rationale before there has been any opportunity to conduct scientific research. Sometimes the right partner willing to participate in the necessary research simply hasn’t come along yet.

It can seem risky to implement something considered “unproven”; however, all innovation depends on a willingness to pioneer the path toward promising, moderate, or strong evidence of effectiveness. ESSA offers a lot of flexibility, but that does not mean open season for experimenting on kids. Although it is often overlooked, ESSA requires any strategy, intervention, or product based on a demonstrated rationale to include ongoing efforts to measure its effectiveness. A simple logic model will not suffice; the real-time impact on students must remain front and center throughout the proving process.

As educators, we should be open to trying new, evidence-based strategies as a way to contribute to our understanding of what works with different types of learners in different settings. As educators in the digital world, however, we have to be more than just open. We have to make concerted efforts to build the body of strong, moderate, and promising strategies that will validate and amplify the impact of digital learning.

Originally posted on the Digital Learning Collaborative.

About the Author

Yovhane Metcalfe, Ph.D., is StrongMind’s Vice President of Education Innovation overseeing the research and development of StrongMind’s digital learning solution. Yovhane uses her background in education research and assessment to keep StrongMind’s students and their families engaged in the online classroom.